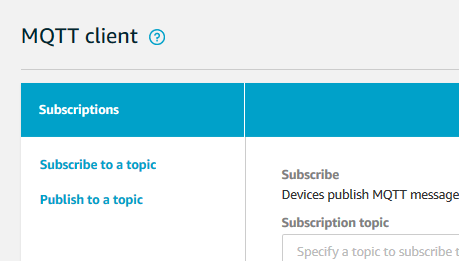

The workshop uses the MQTT test client in AWS IoT Core to simulate a device publishing data into the Kinesis data stream through AWS IoT Core.

- Go to the AWS IoT console and click Test in the left navigation menu.

- On the MQTT client screen, click the Publish to a topic link.

-

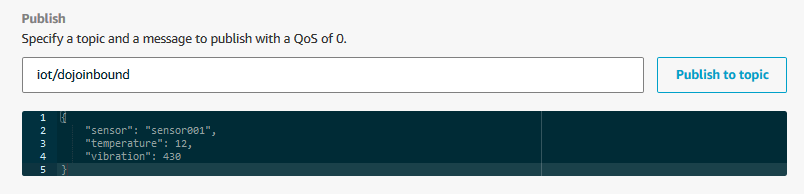

On the next screen, copy-paste the message from the JSON code snippet below and type in iot/dojoinbound for the topic and click on the Publish to topic button.

JSON code snippet:

```json
{
  "sensor": "sensor001",
  "temperature": 12,
  "vibration": 430
}
```

- Click the Publish to topic button several times (for example 5, 10, or 15 times; the number is up to you) to simulate multiple messages sent by the IoT device.
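If you prefer to script the repeated publishing instead of clicking the button, the following is a minimal sketch, assuming Python 3 with boto3 installed and AWS credentials in your configured region that are allowed to publish to iot/dojoinbound. The helper names build_payload, publish_readings, and make_iot_data_client are hypothetical and not part of the workshop. The sketch uses the publish call of boto3's "iot-data" client, which sends the message to the same IoT Core message broker the console test client uses, so the IoT rule should route it the same way.

```python
import json
from typing import Any


def build_payload(sensor: str, temperature: int, vibration: int) -> bytes:
    """Encode one reading in the same JSON shape as the console snippet."""
    message = {"sensor": sensor, "temperature": temperature, "vibration": vibration}
    return json.dumps(message).encode("utf-8")


def publish_readings(client: Any, topic: str, payload: bytes, count: int) -> int:
    """Publish `payload` to `topic` `count` times, like clicking Publish repeatedly.

    `client` is expected to expose publish(topic=..., qos=..., payload=...),
    as boto3's "iot-data" client does.
    """
    for _ in range(count):
        client.publish(topic=topic, qos=1, payload=payload)
    return count


def make_iot_data_client() -> Any:
    """Create an "iot-data" client bound to this account's ATS data endpoint."""
    import boto3  # imported lazily so the helpers above work without boto3

    iot = boto3.client("iot")
    endpoint = iot.describe_endpoint(endpointType="iot:Data-ATS")["endpointAddress"]
    return boto3.client("iot-data", endpoint_url=f"https://{endpoint}")
```

Calling `publish_readings(make_iot_data_client(), "iot/dojoinbound", build_payload("sensor001", 12, 430), 10)` would send ten messages, the equivalent of ten clicks. Passing the client in as a parameter keeps the publishing loop testable without AWS access.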

-

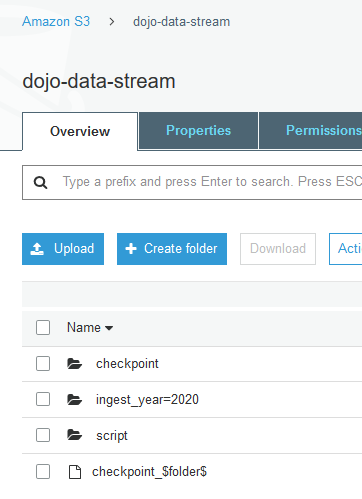

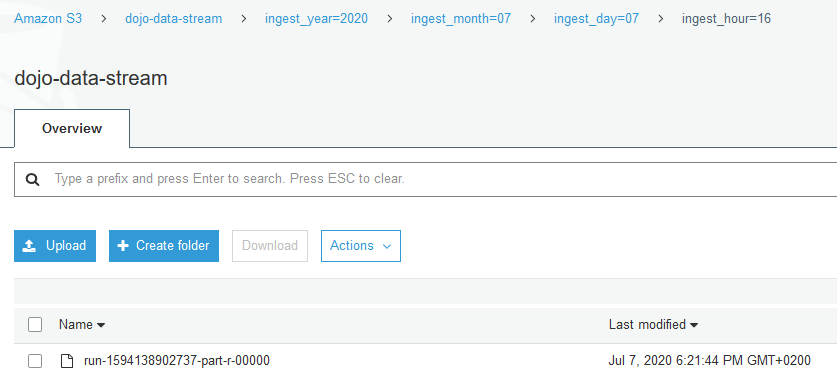

The data is published to the topic by the device. The IoT rule will route the data to the Kinesis data stream and then it will be picked up by already running Glue ETL job to transform and write to the S3 bucket. Goto the S3 bucket and you can see data folder created like ingest_year=2000. The data is by default partitioned by year, month, day and hour. You however can change it in the script.
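To make the folder layout concrete, here is a small sketch of how a partition such as ingest_year=2000 expands into nested key=value folders, one level per partition column. It assumes the folder values come from the UTC ingestion time and that month, day, and hour are zero-padded to two digits; neither detail is confirmed by the workshop. The function partition_prefix and its keys parameter are hypothetical, and keys only mimics changing the partitioning in the Glue script.

```python
from datetime import datetime, timezone
from typing import Sequence


def partition_prefix(
    ingest_time: datetime,
    keys: Sequence[str] = ("year", "month", "day", "hour"),
) -> str:
    """Build a Hive-style prefix such as ingest_year=2000/ingest_month=01/..."""
    utc = ingest_time.astimezone(timezone.utc)  # expects a timezone-aware datetime
    # Two-digit zero padding for month/day/hour is an assumption, not confirmed.
    values = {
        "year": f"{utc.year:04d}",
        "month": f"{utc.month:02d}",
        "day": f"{utc.day:02d}",
        "hour": f"{utc.hour:02d}",
    }
    # Each key becomes one nested folder level, in the order given.
    return "".join(f"ingest_{key}={values[key]}/" for key in keys)
```

For example, `partition_prefix(datetime(2000, 1, 2, 3, tzinfo=timezone.utc))` returns `ingest_year=2000/ingest_month=01/ingest_day=02/ingest_hour=03/`, while passing `keys=("year", "month")` stops at the month level.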

- Browse through the ingest_year=2000 folder structure and you can see the data file that was written.
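To browse the same structure from code rather than the console, the sketch below lists every object key under a prefix using boto3's ListObjectsV2 paginator, so keys in all nested partition folders are returned across pages. The function name list_data_files and the bucket name in the usage note are hypothetical; substitute the bucket created for the workshop.

```python
from typing import Any, Iterator


def list_data_files(s3_client: Any, bucket: str, prefix: str) -> Iterator[str]:
    """Yield the key of every object under `prefix`, across all subfolders."""
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # A page with no matching objects has no "Contents" entry.
        for obj in page.get("Contents", []):
            yield obj["Key"]
```

For example, `list(list_data_files(boto3.client("s3"), "my-workshop-bucket", "ingest_year=2000/"))` would return the keys of the files written under that year. If the job writes under an additional top-level folder, include it in the prefix.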

-

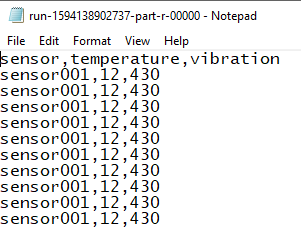

Open the data file, you can see the data sent is transformed into CSV format from JSON format. You will see number of records based on how many times you published the messages.
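The effect of the transformation can be illustrated with a plain-Python sketch: each JSON message becomes one CSV row, so the row count matches the number of messages published. This is not the Glue job's actual code; the column order, the header line, and the field list FIELDS are assumptions based on the sample message, and the real output may differ.

```python
import csv
import io
import json
from typing import Iterable

# Assumed column order, taken from the sample message's keys.
FIELDS = ["sensor", "temperature", "vibration"]


def json_messages_to_csv(messages: Iterable[str]) -> str:
    """Convert JSON sensor messages to CSV text with a header and one row each."""
    buffer = io.StringIO()
    # extrasaction="ignore" drops any keys that are not listed in FIELDS.
    writer = csv.DictWriter(
        buffer, fieldnames=FIELDS, extrasaction="ignore", lineterminator="\n"
    )
    writer.writeheader()
    for message in messages:
        writer.writerow(json.loads(message))
    return buffer.getvalue()
```

Converting the sample message three times yields a header line followed by three identical `sensor001,12,430` rows, one record per published message.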

- This completes the whole flow: the IoT device sends data to the AWS IoT Core gateway, the IoT rule routes the data to the Kinesis data stream, and the Glue job reads the streaming data, transforms it, and writes it to the S3 bucket.

- This completes the workshop. Please go to the next task to clean up the AWS resources so that you don't incur any further cost after the workshop.